Let’s perform a live, improvised, software-driven audio-visual show without hiding behind our laptops!

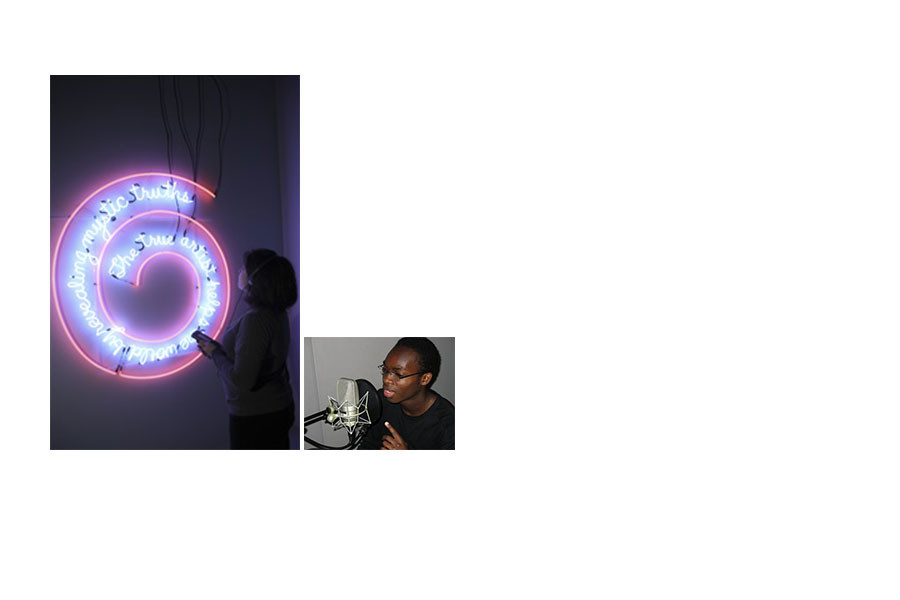

As the visual half of the audio-visual duet ezDAC+corrupt!, I performed a series of live shows. I received real-time data from the musician that could influence the image, just as I sent video-related “events” that could be linked to sounds.

I designed and coded my own video editing software (a Max/MSP/Jitter patch). Rather than using video loops (I hate video loops), I would jump cut through footage I had shot myself in London, Rotterdam and elsewhere, altering it dynamically on the spot. Live data was sent as OSC messages over TCP/IP through an Ethernet cable: it was before Wi-Fi!
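For readers unfamiliar with OSC (Open Sound Control), here is a minimal Python sketch of what such a message looks like on the wire, following the OSC 1.0 encoding: a null-padded address string, a null-padded type-tag string, then big-endian arguments. Over a stream transport like TCP, OSC 1.0 prefixes each packet with its size as an int32. This is only an illustration, not the actual patch (which ran in Max/MSP/Jitter), and the addresses `/video/cut` and `/audio/level` are hypothetical.

```python
import struct

def _pad(data: bytes) -> bytes:
    # OSC strings are null-terminated, then padded with nulls to a multiple of 4 bytes
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def encode_osc(address: str, *args) -> bytes:
    """Encode one OSC message with int32 ('i'), float32 ('f') and string ('s') args."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload

def frame_for_tcp(packet: bytes) -> bytes:
    # OSC 1.0 over a stream (e.g. TCP): prefix each packet with its length as an int32
    return struct.pack(">i", len(packet)) + packet

# Hypothetical messages: the musician sends an audio level, the visuals send a cut event
level_msg = frame_for_tcp(encode_osc("/audio/level", 0.75))
cut_msg = frame_for_tcp(encode_osc("/video/cut", 3))
```

The framed bytes could then be written to a connected socket with `sock.sendall(...)`. Many OSC setups use UDP instead, in which case each packet is sent as a datagram without the size prefix.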

We started using game controllers on stage because we were tired of going to shows where performers stared at their laptops: once they had started an MP3 playlist, you couldn’t tell whether they were making live music or checking their email.

This physical interface was very intuitive, with some random and some programmed accidents. With a little practice, anyone could get results within a few minutes; we were simply privileged users, familiar with the raw material.

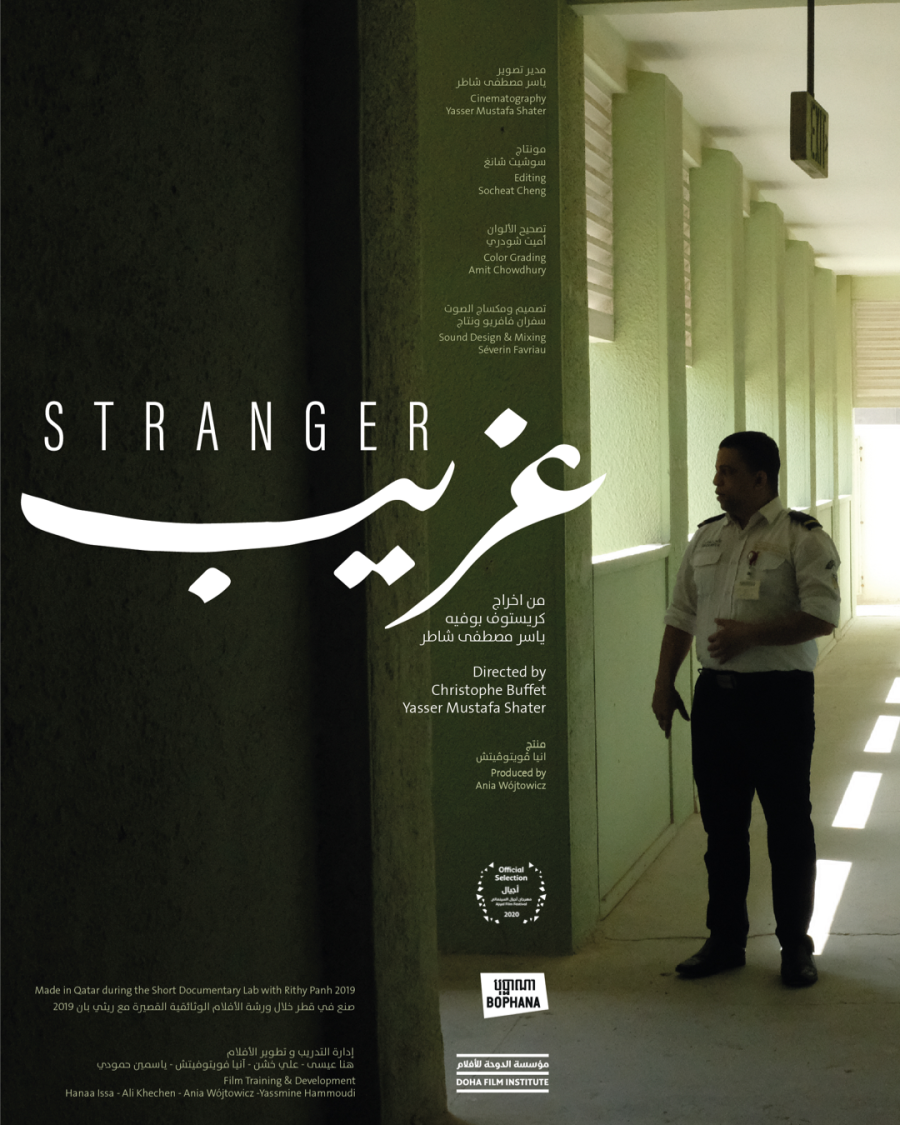

This live exploration of a large collection of rushes, a form of live non-linear editing, was all about control and loss of control. I wrote a short text about it in French for the climax (and end) of this project: our doubledash show at the Pompidou in 2003.